We're still not sure about these facts, but our Milky Way Galaxy contains around a trillion stars, and there are estimated to be a trillion galaxies in our Universe. Doing a bit of math, there are a trillion trillion stars in our Universe.

Astrophysicists seem to find more and more evidence that intelligent life is rare, but with that many solar systems, there certainly could be intelligent life out there.

'Out there' is meant literally, because they ain't here. Why?

Our galaxy is about 100,000 light-years across, so any star within 100 light-years of Earth is very close to us.

(As a refresher, a light-year is the distance light can travel in one year. Light travels at about 186,000 miles per second, so a light-year is about 6 trillion miles.)
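For anyone who wants to check the light-year arithmetic, here is a minimal sketch. It assumes the commonly quoted rounded figure of 186,000 miles per second for light and a 365.25-day year; the constant and function names are just made up for illustration.

```python
# Rough light-year arithmetic. All figures are rounded approximations.
SPEED_OF_LIGHT_MILES_PER_SEC = 186_000     # assumed rounded speed of light
SECONDS_PER_YEAR = 365.25 * 24 * 60 * 60   # about 31.6 million seconds


def light_year_in_miles() -> float:
    """Distance light covers in one year, in miles."""
    return SPEED_OF_LIGHT_MILES_PER_SEC * SECONDS_PER_YEAR


print(f"{light_year_in_miles():.2e} miles")  # 5.87e+12 miles
```

That is a little under 6 trillion miles, which is close enough for our purposes.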

Einstein famously said that the speed of light in a vacuum is the fastest possible speed. One hundred years later, we've confirmed this in many ways. (Yes, Star Trek and Star Wars have variations of a warp drive enabling them to travel much faster than light, but as you might know, these are fictional shows.)

It took us about three days to get to the Moon, with a top speed of roughly 6 miles per second. At that speed, it would take us about three million years to travel 100 light-years.
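The travel-time estimate can be sketched in a few lines. This assumes light moves at roughly 186,000 miles per second and that the ship holds a constant speed the whole way (no speeding up, slowing down, or relativity, which barely matters at these speeds). The function name is hypothetical.

```python
# Back-of-the-envelope travel time at a constant speed.
SPEED_OF_LIGHT_MILES_PER_SEC = 186_000  # assumed rounded speed of light


def years_to_travel(light_years: float, ship_speed_miles_per_sec: float) -> float:
    """Years needed to cover a distance given in light-years.

    Light needs one year per light-year, so a ship that is N times
    slower than light needs N years per light-year.
    """
    times_slower_than_light = SPEED_OF_LIGHT_MILES_PER_SEC / ship_speed_miles_per_sec
    return light_years * times_slower_than_light


print(round(years_to_travel(100, 6)))  # 3100000
```

So at Moon-mission speeds, a 100 light-year trip comes out to roughly three million years.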

No spaceship carrying people or aliens is going to do this. Heck, it will take us about six months just to get to Mars. Sending robots is no problem. Sending people will likely result in death. Elon Musk, who wants to send people to Mars, said that he would not want to go because he doesn't want to die.

------------------------------------

Let's move on to other reasons. A number of scientists and others have said that they've seen alien body parts and/or alien spaceship parts on Earth, but the government has kept this from us. This can't be true because someone would have sneaked them out or taken pictures of them. After all, it's not like this is a big national security issue. Ben Franklin wrote, "Three may keep a secret, if two of them are dead." There's no way that we have physical evidence of aliens on Earth without someone bringing it forth. Yes, Congress has had hearings about aliens coming to Earth, but I'll bet my right arm that no evidence will be provided.

---------------------------------

Furthermore, if aliens had the incredible technology to get to Earth, wouldn't they be able to land here without crashing?

And why come to Earth? Certainly not for our resources, as the aliens did in the excellent movie Independence Day. An advanced civilization would have recycling down pat, so it would not run out of resources. Even if it did, it could find more somewhere in its own solar system without trying to destroy a populated planet.

They would only come to make contact with us. They'd stay in orbit and communicate with us, and ask if they could land. Sure, we'd say. How cool would that be?

The only realistic hope is that aliens would try to communicate with us. SETI's purpose is to look for such signals. Even from a star just 100 light-years away, their message would take 100 years to get here, and our response would take 100 years to get back. Getting such a message would be the event of the millennium.

But you know what they say about long-distance relationships.

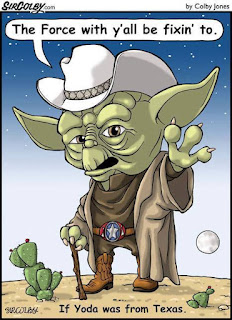

PS: We'd love to have Yoda in Texas.